KEY TAKEAWAYS:

- AI can now autonomously write, test, and ship code

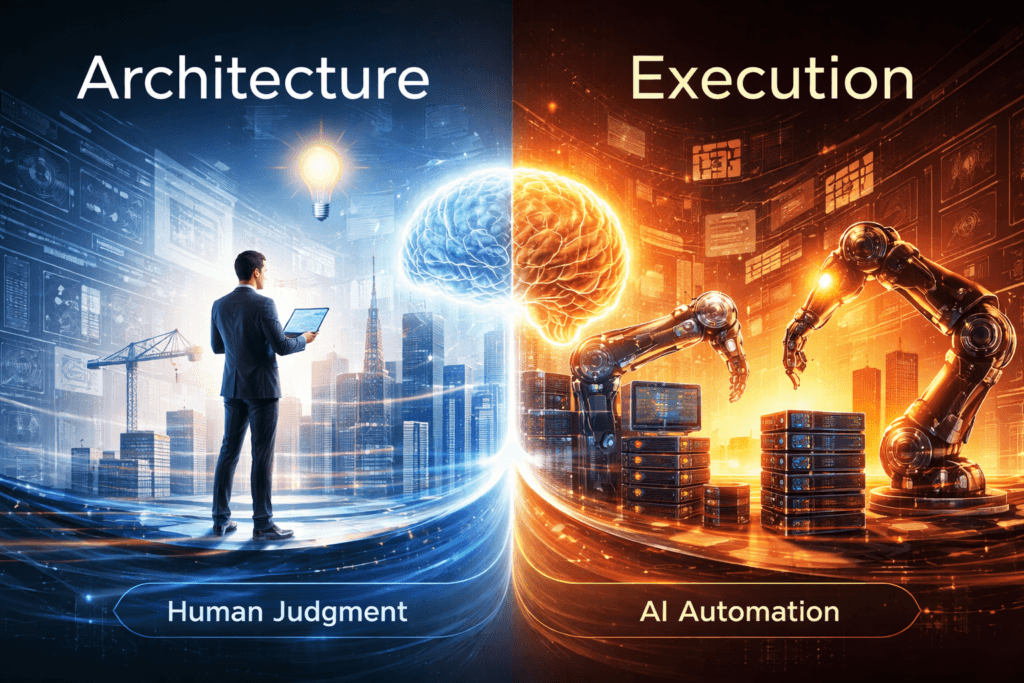

- This commoditizes execution but increases the value of judgment

- Engineers are splitting into Resisters, Over-Trusters, and Orchestrators

- The “Architecture Premium” = judgment, context, and system thinking

- Your career depends on which skills you’re developing now

I spent 3-4 hours on a weekend and built a working product that people actually use. AI wrote most of the code. But that’s not the story. The story is what AI couldn’t do, what it will never do, and why that matters more than ever for your career as an engineer.

Claude Code has been making waves in the developer community lately, and the conversations I’m hearing range from excitement to existential dread.

If you haven’t been following: AI-powered development tools can now connect to your entire development environment, including GitHub, your codebase, error logs, and project management tools. They don’t just suggest code anymore. They can autonomously write, test, debug, and ship features.

I think both the excitement and the dread are missing the real story.

What’s Actually Changing: The Technical Reality

Modern AI coding tools have evolved dramatically. They can:

- Read your entire codebase and understand architecture

- Pull tickets from your project management system

- Write code, run tests, and iterate autonomously

- Debug production errors by reading logs and pushing fixes

- Understand your team’s patterns and conventions

This isn’t “Copilot suggests the next line.” This is “Claude, fix the authentication bug” and it actually does it, end to end.

That’s the “everything” part.

My Weekend Experiment: Building ThinkPartner

Some background on where I’m coming from: I lead a team of architects and tech consultants, focused on enterprise architecture and transformation strategy. Before moving into leadership three years ago, I spent over twenty years as an architect and enterprise architect myself.

I still build things. Python scripts for my AI/ML doctorate research. Prototypes to test ideas. Products to solve real problems I see.

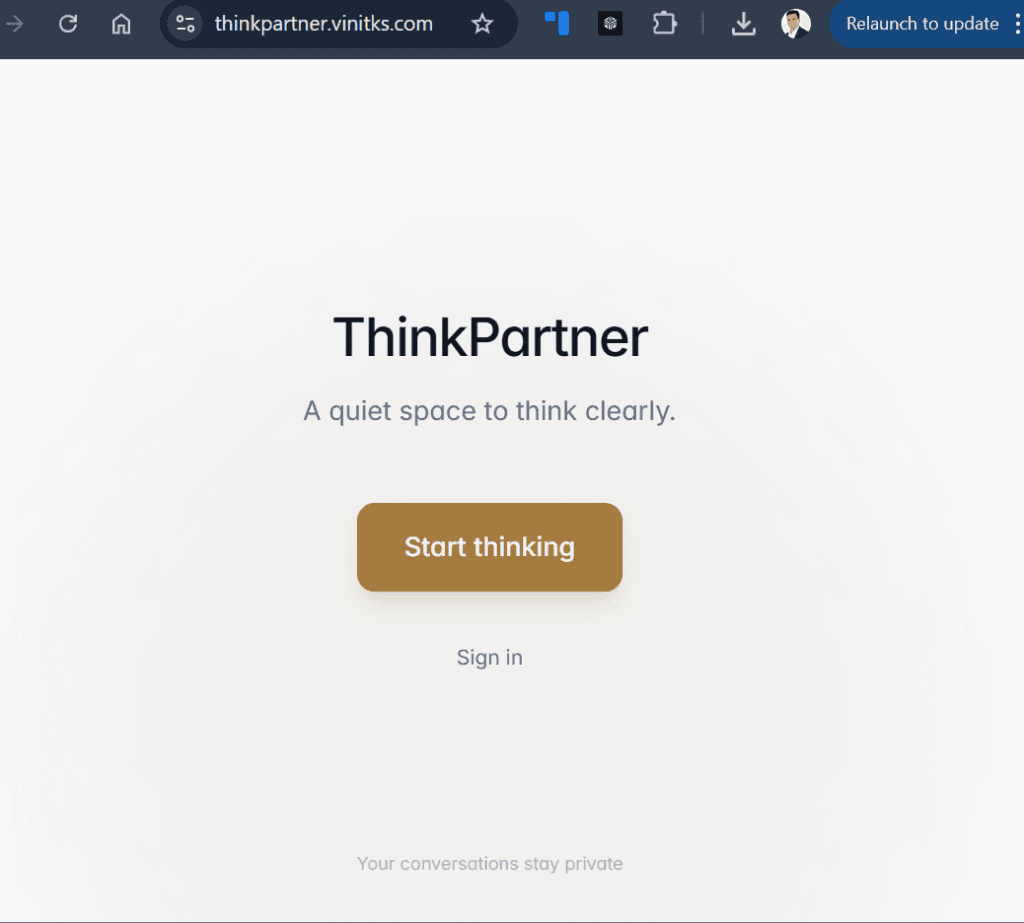

Recently, I built ThinkPartner, a leadership thinking platform with an AI partner named Binny that helps leaders gain clarity and uncover true intent through conversation. Not a task manager. Not a productivity tool. A space for leaders to actually think.

I built it in 3-4 hours on a weekend using Lovable, an AI development tool.

And it works. People use it. But not because I’m a fast coder.

Here’s what really happened:

- Lovable generated probably 90% of the code

- But I decided what problem to solve (leaders don’t lack information, they lack space to think clearly)

- I made the critical choice: conversation-first, no onboarding friction, no login until after value is experienced

- I overrode AI’s suggestions when they added friction that would break the experience

- I designed how Binny should shift fluidly between guiding, mentoring, and coaching based on context

- I caught the psychological nuances, like framing feedback as reflection, not evaluation

“The code was the commodity. The understanding of what leaders actually need? That was the value.”

That’s the “nothing” part. The fundamentals haven’t changed.

The Pattern I’m Seeing: Three Types of Engineers

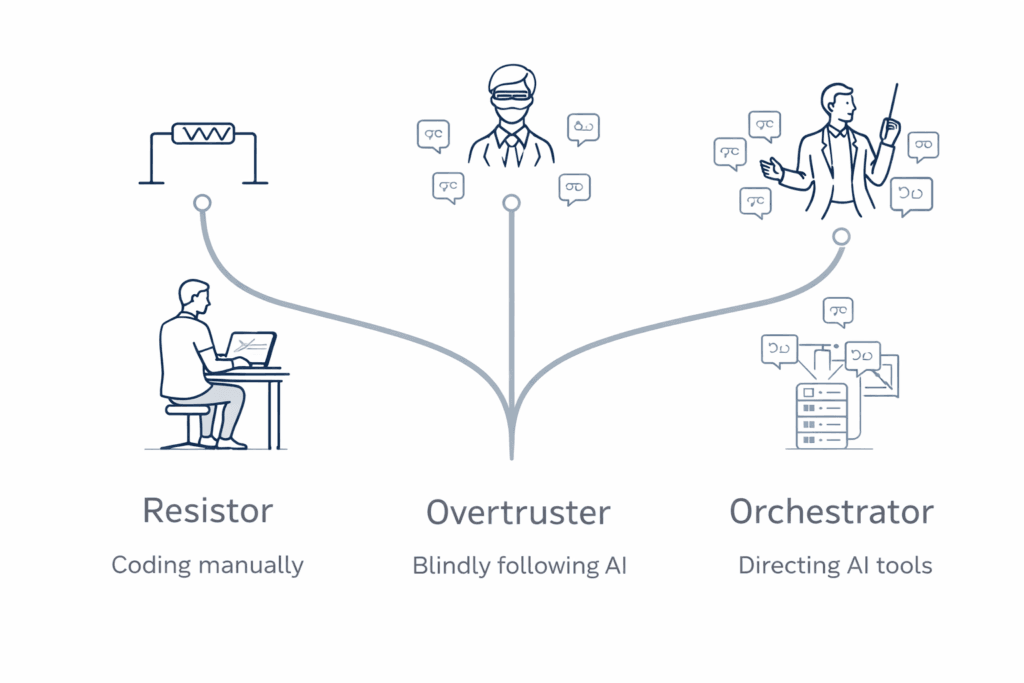

Engineers are splitting into three distinct camps:

1. The Resisters

“This is overhyped, I can code faster myself”

Maybe true today. But they’re optimizing for the wrong metric. Speed of execution is being commoditized. These engineers are competing on a dimension that’s losing value daily.

2. The Over-Trusters

“AI can handle it, I’ll just review”

They’re about to learn expensive lessons in production. They ship fast but discover problems late. They trust the syntax but miss the system implications.

3. The Orchestrators

“AI handles execution, I provide judgment and architecture”

They’re building better products, faster, with less stress. They’ve realized that their value isn’t in typing code; it’s in knowing what to build and why.

I’m actively coaching my team toward that third camp. Because that’s where the sustainable value is.

Understanding the Architecture Premium

The idea is simple: as AI commoditizes code execution, value flows to judgment, context, and system thinking.

AI can pull your ticket, write the code, run your tests, and push to GitHub. That’s incredible.

But can it:

- Decide whether you should even build this feature, or if it’ll add complexity without value?

- Understand your users well enough to know what will delight them versus frustrate them?

- Spot the architectural decision that will cause problems six months from now?

- Know when “technically correct” code will create a maintenance nightmare?

- Navigate the organizational context of why this feature matters to stakeholders?

That’s the architecture premium. That’s human judgment. That’s what hasn’t changed.

A Real Example: When Perfect Code Isn’t Good Enough

A friend who’s a principal engineer recently showed me code that Claude generated for their team. It looked perfect: clean, tested, apparently production-ready.

Except it would’ve caused a cascading failure in their distributed system at scale.

Claude didn’t know their traffic patterns. Claude didn’t know their infrastructure constraints. Claude hadn’t lived through their previous outages.

My friend knew those things. That knowledge, earned through experience and battle scars, is worth more than execution speed.

This is the pattern I keep seeing: AI can generate syntactically correct code, but it doesn’t have the context that comes from living with a system in production.
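To make that gap concrete, here is a purely hypothetical sketch. It is not the code from the anecdote above, and the function names and parameters are invented for illustration. It contrasts a retry policy that passes any unit test with one that assumes knowledge of production load: retrying immediately is harmless for a single client, but when many clients do it against a struggling service, the retries themselves can keep the service down. Exponential backoff with jitter is one common way to spread that load out.

```python
import random
from typing import List, Optional


def naive_retry_delays(attempts: int) -> List[float]:
    """Hypothetical 'clean' policy: retry immediately, every time.

    Correct in isolation, but many clients retrying in lockstep can
    overwhelm a service that is trying to recover.
    """
    return [0.0] * attempts


def backoff_retry_delays(
    attempts: int,
    base: float = 0.1,   # assumed starting delay in seconds
    cap: float = 5.0,    # assumed upper bound on any single delay
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Exponential backoff with full jitter.

    The delay before retry i is drawn uniformly from
    [0, min(cap, base * 2**i)], so clients spread out over time.
    """
    rng = rng or random.Random()
    return [rng.uniform(0.0, min(cap, base * 2 ** i)) for i in range(attempts)]
```

Both functions are equally easy to generate and equally easy to test. Choosing between them, and picking sensible values for `base` and `cap`, depends on traffic patterns and outage history that live outside the codebase.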

The Uncomfortable Truth About Your Career

If your value proposition is “I execute tickets efficiently and write clean code,” you’re competing with tools that cost roughly $20/month and never sleep.

That’s everything changing.

But if you can say:

- “I understand this system deeply enough to know what will break”

- “I know what users need before they articulate it”

- “I make good architectural decisions under uncertainty”

- “I’ve seen these patterns fail before, and here’s why”

Then you’re capturing the architecture premium. And in that sense, nothing has changed: those skills were always the most valuable. They’ve just become the only defensible ones.

What Experience Actually Teaches

Throughout my years as an architect and enterprise architect, I learned that success was never about execution speed. It was about understanding what problems are worth solving, making architectural choices that scale both technically and organizationally, and knowing what “good” looks like from years of seeing what fails in production.

ThinkPartner proved it again: you can build valuable products without writing most of the code yourself. Because building was never really about syntax. It was about thinking.

When I write Python for my research, the code is a tool for exploring ideas, not the end goal. The insight is the goal.

The same is true for consulting work. The deliverable isn’t the code. It’s the strategic insight about what to build and how to build it sustainably.

What I Tell My Team

Before you build something (or ask AI to build it), always ask:

- Why are we building this? What’s the real problem?

- What’s the simplest solution that delivers actual value?

- What are we assuming that could be catastrophically wrong?

- How will this behave when it hits real users and real scale?

“That’s the architecture premium. Everything else is execution.”

The Bottom Line

We’re not being replaced. We’re being sorted.

Into those who see engineering as execution, and those who see it as architecture.

Into those who compete on speed, and those who compete on judgment.

Into those who write code, and those who solve problems.

The best engineers were always the ones with judgment, not just speed. The ones who understood systems, users, and trade-offs. The ones who knew what not to build. Those skills were always the premium.

AI didn’t create that division. It just made it undeniable.

What To Do This Week

If you’re an engineer:

- Review your last three projects. How much was execution vs. architectural judgment? If it’s mostly execution, you’re building the wrong skills.

- Identify one area where you have context AI doesn’t: domain knowledge, system history, user insights. Double down on that.

- Practice articulating the “why” behind your technical decisions: in code reviews, in design docs, in conversations with your team.

If you’re a leader:

- Audit your team’s skills. Who’s optimizing for speed vs. judgment? Who asks the best questions?

- Create space for architectural thinking, not just ticket completion. Change how you measure productivity.

- Start hiring and promoting based on demonstrated judgment, not just delivery velocity.

The sorting is happening now. Choose deliberately.